How does the feedback collection mechanism work?


Table of Contents

- What is CSAT?

- What is a good CSAT rating?

- How does the Feedback module get triggered?

- How does the Feedback collection mechanism work?

- Editing the Feedback Smart Skill

- What are the benefits of Feedback collection?

- Where can we check the Feedback Analytics?

- What to do if your Feedback ratings are low?

- User Feedback is an asset

User feedback is critical for any business. It tells you how your bot is performing: whether it is functioning well and whether it is able to solve users' queries. It gives you an overall view of how users experience your bot. Although Intelligent Analytics already reports metrics such as Bot Automation, Query Completion, and Funnel Completion Rate, user feedback comes directly from users rather than being inferred, which makes it a better guide for improving the bot.

Every new bot created in the Conversation Studio tool includes this Feedback Skill by default, under the name Collecting User Feedback - CSAT.

What is CSAT?

CSAT is short for Customer Satisfaction, a commonly used key performance indicator that tracks how satisfied users are with your organization's products, in this case, bots. It is expressed as the percentage of satisfied users.

CSAT is an important metric that shows you what your end users think of the bot.

This metric complements other metrics such as "Bot Automation" and "Query Completion": while those metrics show the performance of the bot's NLU and conversation flow design, CSAT tells you whether the bot is actually helping the end user. Together, these three metrics give you a holistic view of the bot's actual performance.

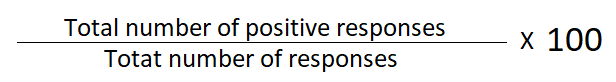

CSAT is calculated as -

CSAT (%) = (Number of positive responses / Total number of responses) × 100

Here, positive responses are the total number of 4 and 5 ratings received.
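A minimal Python sketch of this calculation, assuming CSAT is the share of 4 and 5 ratings among all ratings received, expressed as a percentage. The helper name `csat_percentage` is hypothetical and not part of the platform; it also assumes, per the collection flow described in this article, that a "No" answer is already stored as a rating of 1.

```python
from collections.abc import Iterable


def csat_percentage(ratings: Iterable[int]) -> float:
    """Return CSAT as the percentage of positive (4 or 5) ratings.

    Hypothetical helper: assumes every rating is an integer from 1 to 5,
    with a "No" answer to "Was I able to help you?" counted as 1.
    """
    ratings = list(ratings)
    if not ratings:
        raise ValueError("CSAT is undefined when no ratings have been received")
    for r in ratings:
        if not 1 <= r <= 5:
            raise ValueError(f"rating out of range: {r}")
    positive = sum(1 for r in ratings if r >= 4)  # 4 and 5 count as positive
    return positive * 100 / len(ratings)


# Illustrative input: 10 responses, 7 of which are 4s or 5s.
sample = [5, 4, 4, 5, 3, 1, 5, 4, 2, 5]
print(f"CSAT: {csat_percentage(sample):.1f}%")  # prints CSAT: 70.0%
```

Multiplying before dividing keeps whole-number results exact (700 / 10 is exactly 70.0).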

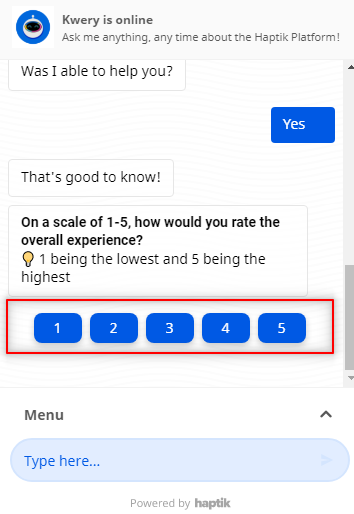

The responses from the users are collected in the following way, as shown -

What is a good CSAT rating?

- What counts as a good CSAT rating varies by industry, according to the ACSI (American Customer Satisfaction Index).

- A few examples of good CSAT ratings across industries are -

  - Airlines: 75%

  - Ambulatory Care: 74%

  - Apparel: 77%

  - Athletic Shoes: 79%

  - Hospitals: 69%

- Since our bots are not assigned to a specific industry category, a CSAT above 70% can generally be considered good.

How does the Feedback module get triggered?

Feedback is triggered at the logical end of any query. Once the user has reached the logical end of the conversational journey, we ask the user to provide feedback.

You can mark the logical end of a query by setting the step as an End step, a Context-clear step, or both (End step + Context-clear step).

When the conversation reaches any of these steps, the platform sends the feedback message "Was I able to help you? Yes or No?". If the user replies Yes or No, the feedback journey is triggered and the user is taken through the next steps of the feedback flow.

Can we change the "Was I able to help you?" message?

- No. The message comes from the platform pipeline and is hardcoded, so it cannot be changed.

- However, you can change the subsequent replies given by the bot.

- Because it is a system message, you do not need to add it to every step.

If your bot is multilingual, the translated equivalent of "Was I able to help you?" is sent.

You can also configure the time delay before this system message is sent, under Business Manager > General Settings > User Feedback.
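The trigger rule above can be modeled in a few lines. This is an illustrative sketch only, not the platform's implementation: `Step` and `should_trigger_feedback` are hypothetical names, and it assumes each step simply carries one flag for End step and one for Context-clear step.

```python
from dataclasses import dataclass

# Hardcoded system message sent by the platform (per this article).
FEEDBACK_PROMPT = "Was I able to help you?"


@dataclass
class Step:
    """Hypothetical model of a conversation step and its end-of-query flags."""
    name: str
    is_end_step: bool = False     # marked as an End step
    clears_context: bool = False  # marked as a Context-clear step


def should_trigger_feedback(step: Step) -> bool:
    """Feedback fires at the logical end of a query: an End step,
    a Context-clear step, or a step that is both."""
    return step.is_end_step or step.clears_context


# Illustrative journey: only the last step ends the query.
journey = [
    Step("Greeting"),
    Step("Ask order ID"),
    Step("Show order status", is_end_step=True, clears_context=True),
]
for step in journey:
    if should_trigger_feedback(step):
        print(f"After '{step.name}': send system message '{FEEDBACK_PROMPT}'")
```

The configured time delay is left out of this sketch because its exact behavior is not described here; in the product it is set in Business Manager rather than in the flow.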

How does the Feedback collection mechanism work?

The bot asks "Was I able to help you?", and the user answers either Yes or No.

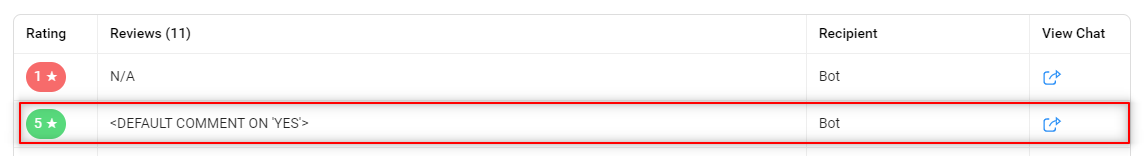

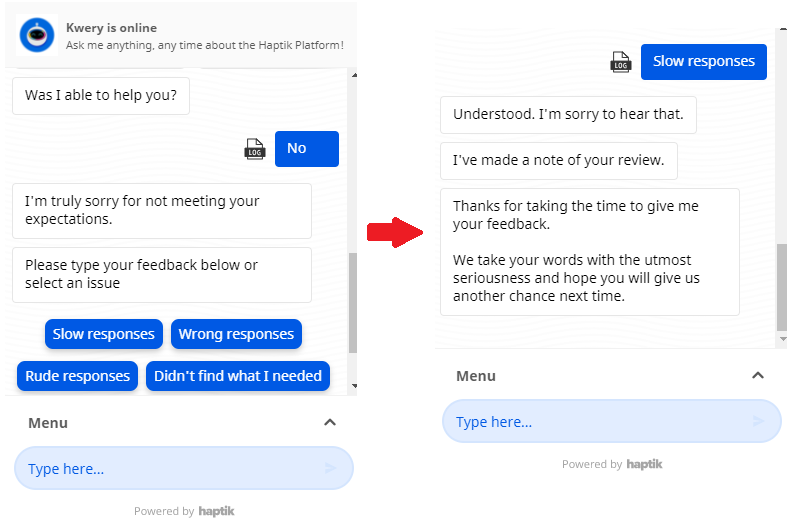

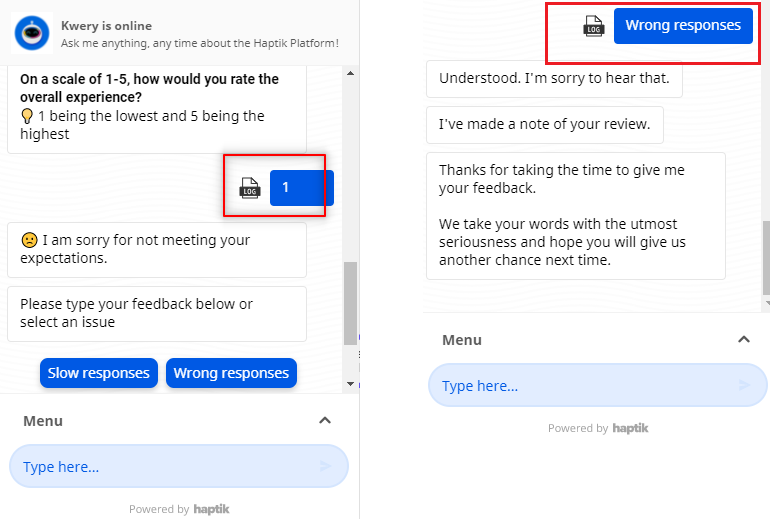

If the user replies No, the feedback is recorded as a rating of 1, since the bot was not able to help. The bot then tries to collect qualitative feedback (comments) from the user, as shown.

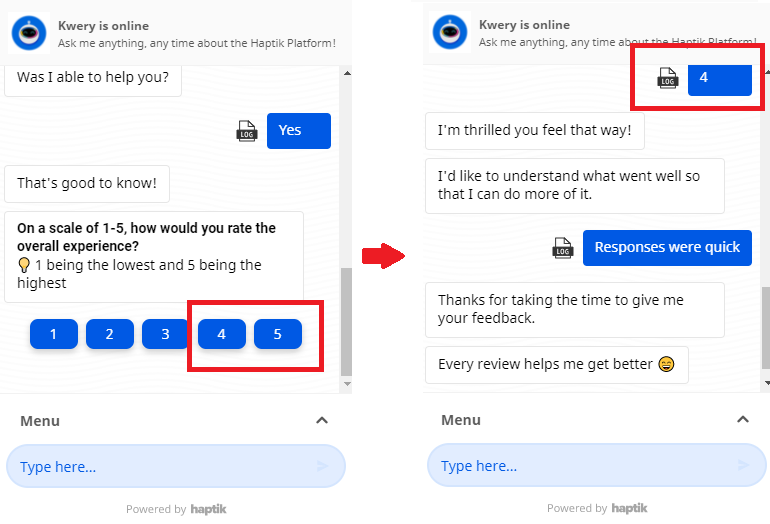

If the user replies Yes, the bot asks: "That's good to know. On a scale of 1 to 5, how would you rate the overall experience?"

For every rating the user gives, the bot has a corresponding response, which keeps the feedback collection flow going.

If the user gives a rating of 4 or 5, the bot responds as follows -

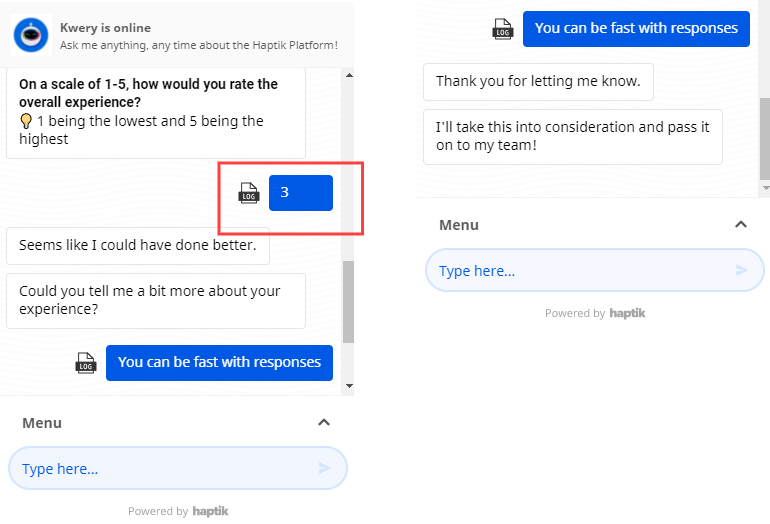

If the user gives a rating of 3, the bot responds as follows -

If the user gives a rating of 1 or 2, the bot responds apologetically, and the flow is as follows -

In short, at every touchpoint the bot collects quantitative feedback on a scale of 1 to 5 and then tries to collect qualitative feedback (comments) through its follow-up responses. This keeps the conversation flowing.
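The mapping from user answers to recorded ratings and follow-up branches can be summarized as a small function. This is a hypothetical model, not the platform's code: `handle_feedback` and the branch labels are illustrative, and the actual bot responses for each branch are whatever you configure in the Feedback Smart Skill.

```python
from typing import Optional, Tuple


def handle_feedback(helped: str, rating: Optional[int] = None) -> Tuple[int, str]:
    """Return (recorded rating, follow-up branch) for one feedback journey.

    helped: the user's answer to "Was I able to help you?" ("Yes" or "No").
    rating: the 1-5 score the user gives after answering Yes.
    Branch labels are hypothetical names for the configured bot responses.
    """
    answer = helped.strip().lower()
    if answer == "no":
        # The bot could not help, so the rating is recorded as 1
        # and the bot goes on to ask for comments.
        return 1, "collect_comments"
    if answer != "yes":
        raise ValueError("expected Yes or No")
    if rating is None or not 1 <= rating <= 5:
        raise ValueError("after Yes, a rating from 1 to 5 is required")
    if rating >= 4:
        return rating, "rating_4_5"
    if rating == 3:
        return rating, "rating_3"
    return rating, "rating_1_2"  # apologetic response branch


print(handle_feedback("No"))      # (1, 'collect_comments')
print(handle_feedback("Yes", 5))  # (5, 'rating_4_5')
```

Recording "No" as 1 means it flows into the same CSAT calculation as an explicit low score.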

Editing the Feedback Smart Skill

- To edit the Feedback Smart Skill, you can change its flow in the Conversation Studio tool, or add an agent handover depending on your business requirements.

- You can also make the following changes -

  - Add Steps as required.

  - Edit the User Messages and the Bot Responses within the steps.

  - Change the connections. You can -

    - Add or remove a connection between steps.

    - Change the conditions in a connection.

  - Add a Skill if required.

What are the benefits of Feedback collection?

- With the Feedback journey, we observed an increase in the feedback collection rate.

- It is omnichannel: because it is a journey, it works on any channel where the bot is deployed.

- You can add new flows within the journey and change the bot responses.

- You can add agent handovers at points where users give a poor rating.

Where can we check the Feedback Analytics?

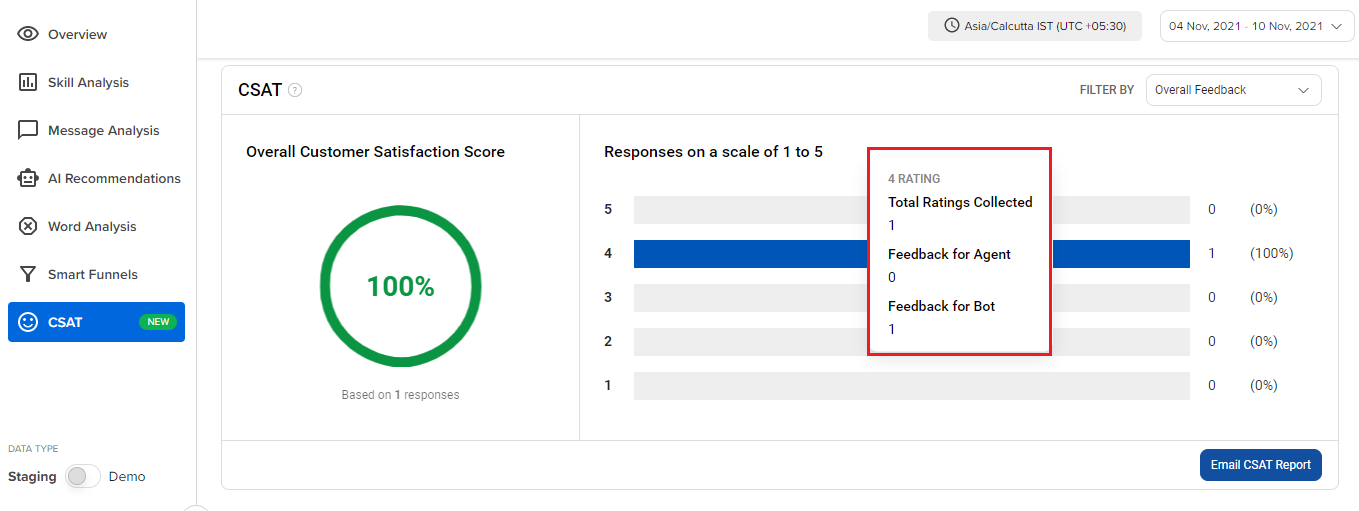

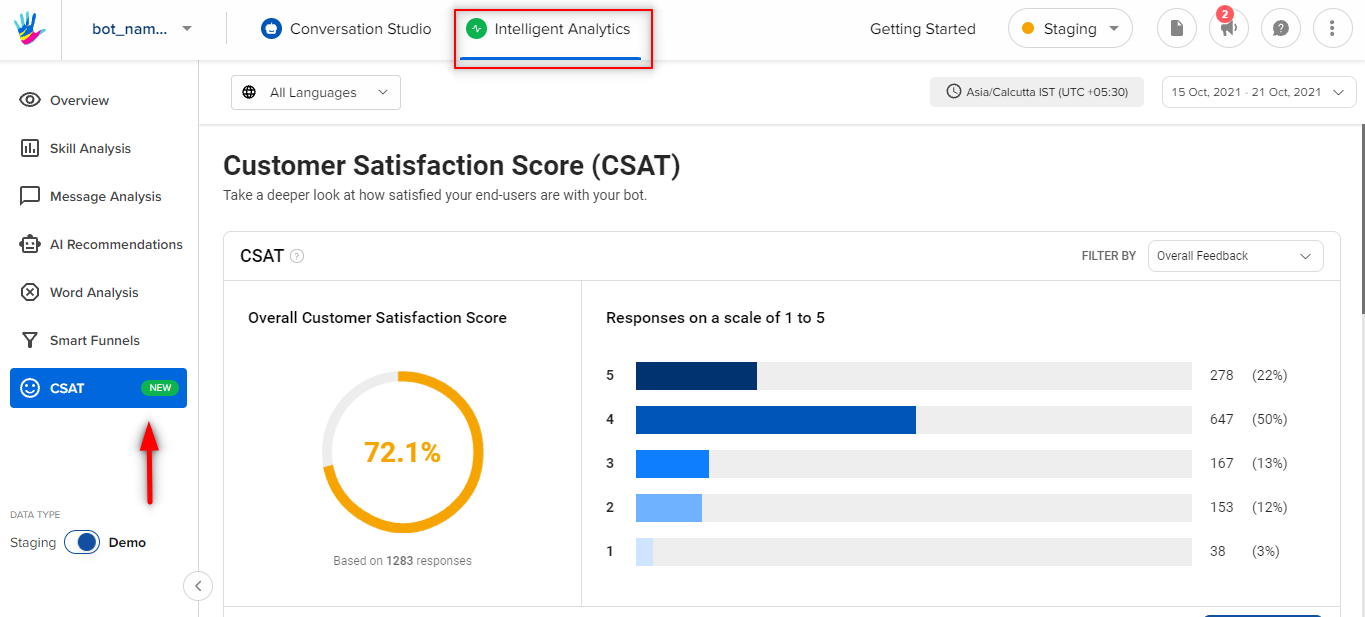

Now that we have seen how feedback is collected within the bot flow, let's look at how the collected feedback can be viewed in Intelligent Analytics.

Open Intelligent Analytics for your bot and go to the CSAT section. There you can see all the ratings your bot has received from users, as shown -

What to do if your Feedback ratings are low?

If you are receiving a poor CSAT rating, start by looking at the following points -

- Identify the skills that receive the most low ratings from users.

  - Start with the "skillset" column.

  - Going over this column should show you the most common starting skillset for low feedback.

- Go through the comments to find patterns of common problems.

  - Once you have identified the most frequent skillsets, look at each one separately and go through its comments to get a general idea of the overarching problem.

  - Example: In the Order bot, users gave poor feedback on the Track my order status flow. When users requested their order status, they received a static message such as "your order will be delivered in a week from the day of purchase" instead of real-time tracking data, so they gave poor ratings.

  - Checking comments helps you understand where the problem lies.

- Go through the chat links to get a detailed understanding.

  - Finally, for each skillset, click the chat link to go through the conversation that led the user to leave low feedback, so you get a complete picture of what went wrong.
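If you have the CSAT records available as data for offline review, finding the skillsets with the most low ratings and reading their comments is a simple group-and-count. A minimal sketch, assuming each record is a dict with `skillset`, `rating`, and `comment` fields and that ratings of 1 and 2 count as low; the record shape and the helpers `low_rating_counts` and `low_rating_comments` are hypothetical, not the Intelligent Analytics data format.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Record = Dict[str, object]  # hypothetical shape: skillset, rating, comment


def low_rating_counts(records: Iterable[Record],
                      low_threshold: int = 2) -> List[Tuple[str, int]]:
    """Count ratings at or below low_threshold per starting skillset,
    most frequent first."""
    counts = Counter(
        str(r["skillset"]) for r in records if int(r["rating"]) <= low_threshold
    )
    return counts.most_common()


def low_rating_comments(records: Iterable[Record], skillset: str,
                        low_threshold: int = 2) -> List[str]:
    """Collect non-empty comments left with low ratings for one skillset."""
    return [
        str(r["comment"]) for r in records
        if r["skillset"] == skillset
        and int(r["rating"]) <= low_threshold
        and r["comment"]
    ]


# Illustrative records, not real data.
records = [
    {"skillset": "Track my order", "rating": 1, "comment": "No live tracking"},
    {"skillset": "Track my order", "rating": 2, "comment": "Static message"},
    {"skillset": "Returns", "rating": 5, "comment": ""},
    {"skillset": "Returns", "rating": 1, "comment": "Could not find policy"},
    {"skillset": "Payments", "rating": 4, "comment": ""},
]
print(low_rating_counts(records))  # [('Track my order', 2), ('Returns', 1)]
print(low_rating_comments(records, "Track my order"))
```

Sorting by count surfaces the skillset to investigate first; the chat links remain the place to see the full conversations.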

To know more about how to check the CSAT score, click here.