A/B Testing


Overview

“A/B Testing” or “Split Testing” is a technique that lets you split a bot’s Conversational Journey into two variants, `A` and `B`, so that some of the users coming to your bot see Journey `A` and the others see Journey `B`. The goal is to help you determine which of the two conversational journey variants performs better.

Traffic Split between Variants

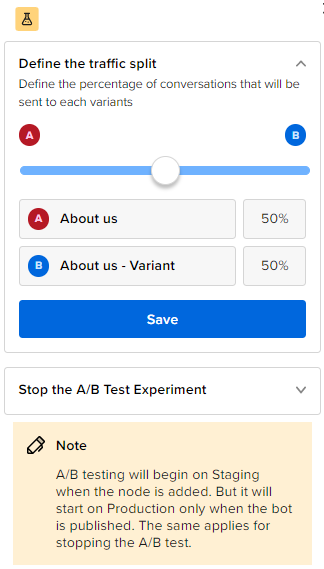

By default, the traffic split between the two variants is 50%-50%. This means that 50% of the users coming to your bot are taken through the Variant `A` journey and the remaining 50% get the Variant `B` journey. You can customize this split, for example to 20%-80% or 60%-40%, by modifying the A/B test.
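To make the idea of a percentage split concrete, here is a minimal sketch of one common way to divide users between two variants. It is an illustration only and not how the platform itself assigns users; the function names `assign_variant` and `split_counts`, the `percent_a` parameter, and the hash-based bucketing are all assumptions made for this example.

```python
import hashlib


def assign_variant(user_id: str, percent_a: int = 50) -> str:
    """Return "A" or "B", sending roughly percent_a% of users to Variant A.

    Hypothetical helper: the bucketing scheme is an assumption for
    illustration, not the platform's documented behaviour.
    """
    if not 0 <= percent_a <= 100:
        raise ValueError("percent_a must be between 0 and 100")
    # Hash the user id so the same user always falls into the same bucket (0-99).
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return "A" if bucket < percent_a else "B"


def split_counts(user_ids, percent_a: int = 50) -> dict:
    """Count how many of the given users land in each variant."""
    counts = {"A": 0, "B": 0}
    for user_id in user_ids:
        counts[assign_variant(user_id, percent_a)] += 1
    return counts


if __name__ == "__main__":
    # Made-up user ids, used only to show a 20%-80% split in action.
    users = [f"user-{i}" for i in range(10_000)]
    print(split_counts(users, percent_a=20))
```

Because the assignment depends only on the user id, a returning user keeps seeing the same variant in this sketch, which keeps the two groups cleanly separated when you later compare them.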

Creating a New A/B Test

- Log in to your bot and navigate to the Conversation Studio panel.

- Click on the A/B Testing button available on the screen. A slide-in window appears from the right displaying instructions to create a new A/B test.

- Select any one static node in your bot for A/B testing and click Start A/B Test Experiment.

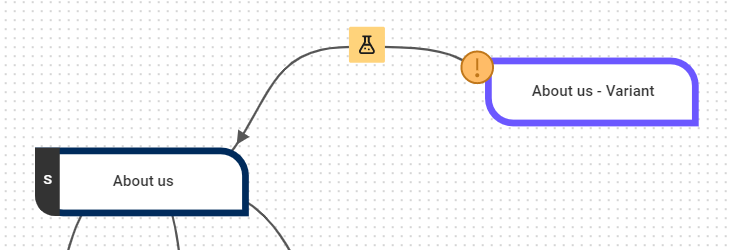

A variant of the selected static node is now created. You can now build the journey that follows it.

Modifying the A/B Test

After the variant of the selected static node is created, define the traffic split by dragging the bar right or left according to the percentage of users you want to send to each variant. Click Save to save the traffic split.

Measuring the A/B Test

Since A/B testing splits a Conversational Journey into two variants, the best way to measure which journey performs better is the `User Journey` Analytics feature. To do this, create two User Journeys in Intelligent Analytics: one for Variant `A` and one for Variant `B`.
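Once both User Journeys have collected data, comparing them comes down to comparing two completion rates. The sketch below shows one standard way to do that, a two-proportion z-test, under the assumption that you can read off how many users entered and how many completed each journey. The function names and the figures in the example are hypothetical and are not taken from the analytics tool.

```python
import math


def completion_rate(completed: int, entered: int) -> float:
    """Share of users who finished a journey out of those who entered it."""
    if entered <= 0:
        raise ValueError("entered must be positive")
    return completed / entered


def two_proportion_z(completed_a: int, entered_a: int,
                     completed_b: int, entered_b: int) -> float:
    """z-score for the difference in completion rate (B minus A).

    A positive value means Variant B completed at a higher rate.
    """
    rate_a = completion_rate(completed_a, entered_a)
    rate_b = completion_rate(completed_b, entered_b)
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (completed_a + completed_b) / (entered_a + entered_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / entered_a + 1 / entered_b))
    if std_err == 0:
        return 0.0
    return (rate_b - rate_a) / std_err


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    z = two_proportion_z(completed_a=100, entered_a=1000,
                         completed_b=150, entered_b=1000)
    # |z| above roughly 1.96 corresponds to about 95% confidence
    # that the difference is not due to chance.
    print(f"z = {z:.2f}, significant at ~95%: {abs(z) > 1.96}")
```

A difference that looks large on a small number of users can still be noise, so a check of this kind is a reasonable guard before declaring a winner.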

Stopping the A/B Test

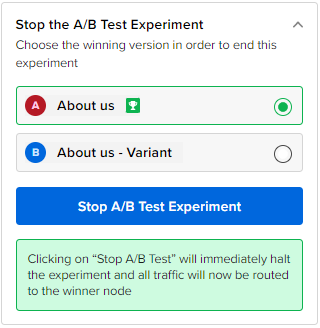

Once the A/B test has been live long enough to measure both variants, you will know which variant, `A` or `B`, performs better.

To stop an ongoing A/B test, follow these steps:

- Click on the icon. The slider window appears.

- In the Stop A/B Test Experiment panel, choose the winning variant. Once the A/B test is stopped, the losing variant will be deactivated.

- Click Stop A/B Test Experiment.