How to test the bot?

Testing checks whether the actual product matches the expected requirements and helps make sure it is error-free. It is one of the most important stages of development because it finds errors or defects early in the development lifecycle.

In IVA lingo, testing helps businesses ensure that the conversation flows are fully functional and that users' queries are resolved by the bot.

At Haptik, testing is done in three different phases -

- Training data testing

- Functional testing

- Regression testing

Training data testing

In this phase, you test the bot's training data using the approaches described below.

Before you start testing the different components of the bot, let's look at the essentials of testing, starting with preparing a test set.

Prepare a Test Set

Create a comprehensive test set of sentences that you expect your users to say, containing sentences of the following types:

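- sentences that should be handled by the bot

- sentences that are out of scope for the bot

Don’t add these sentences to any of the User Messages. Keeping the test set separate from the training data ensures that you are testing the bot on sentences it has never seen.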

This follows the principle of test-driven development (TDD), a development technique in which you first write a test that fails before doing any new development.
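One way to keep the test set honest is to store each test sentence with the step you expect it to trigger (or none, for an out-of-scope sentence) and check it against your User Messages before running it. The Python sketch below assumes both are available as plain lists of strings; `LabeledSentence`, `find_leaked_cases`, and the step name `sip_info` are hypothetical names for illustration, not part of Haptik's tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LabeledSentence:
    sentence: str
    # Step the bot should trigger, or None for an out-of-scope sentence.
    expected_step: Optional[str]


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants count as duplicates."""
    return " ".join(text.lower().split())


def find_leaked_cases(test_set: list[LabeledSentence], user_messages: list[str]) -> list[LabeledSentence]:
    """Return test sentences that also appear in the training User Messages."""
    training = {normalize(m) for m in user_messages}
    return [case for case in test_set if normalize(case.sentence) in training]


user_messages = ["What is SIP", "Tell me about SIP", "How can I know about SIP"]
test_set = [
    LabeledSentence("Explain SIP to me", "sip_info"),     # hypothetical step name
    LabeledSentence("tell me about  SIP", "sip_info"),    # duplicates a User Message
    LabeledSentence("What's the weather today?", None),  # out of scope
]
leaked = find_leaked_cases(test_set, user_messages)
print([case.sentence for case in leaked])  # ['tell me about  SIP']
```

Any sentence this reports should be rephrased or removed from the test set, since the bot has already been trained on it.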

Execute the Test Set

Remember that your bot will only be as good as your Test Set. The following notes will help ensure the quality of your test cases, and hence the quality of your bot.
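Executing the test set comes down to sending each sentence to the bot, comparing the detected step with the expected one, and reviewing the failures. The sketch below assumes you can call the bot's step detection as a function; `toy_predict` is a hypothetical keyword-based stand-in, not Haptik's model, and it deliberately fires on the word "SIP" alone to mimic the problem described in the notes below.

```python
from typing import Callable, Optional

# A predictor maps a user sentence to the detected step (None = no step / out of scope).
Predictor = Callable[[str], Optional[str]]


def toy_predict(sentence: str) -> Optional[str]:
    """Hypothetical stand-in for the bot: fires 'sip_info' whenever the word SIP appears."""
    return "sip_info" if "sip" in sentence.lower().split() else None


def run_test_set(cases: list[tuple[str, Optional[str]]], predict: Predictor):
    """Run every test sentence and return (accuracy, list of failures)."""
    failures = []
    for sentence, expected in cases:
        actual = predict(sentence)
        if actual != expected:
            failures.append((sentence, expected, actual))
    accuracy = 1 - len(failures) / len(cases) if cases else 0.0
    return accuracy, failures


cases = [
    ("What is SIP", "sip_info"),
    ("SIP bjhfv vbf", None),  # gibberish: should not trigger the SIP step
    ("Book a flight", None),  # out of scope
]
accuracy, failures = run_test_set(cases, toy_predict)
print(f"accuracy: {accuracy:.2f}")  # accuracy: 0.67
for sentence, expected, actual in failures:
    print(f"FAIL {sentence!r}: expected {expected}, got {actual}")
```

Looking at the individual failures is usually more useful than the accuracy number alone, because each one points at a specific gap in the training data.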

Let’s say you’ve added the following sentences to your User Messages:

- What is SIP

- Tell me about SIP

- How can I know about SIP

Because "SIP" occurs in all of these sentences, the model may end up triggering this step even if the user enters:

- SIP.

- SIP + gibberish, e.g. "SIP bjhfv vbf"

Therefore, make sure your bot is learning the right things by testing it on phrases/words that occur repeatedly in your User Messages.
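To find the words worth probing this way, you can count how many of a step's User Messages contain each word. The sketch below uses simple whitespace tokenization and an assumed threshold (`min_share`, 80% of messages by default); both are illustrative choices, not a Haptik setting.

```python
import string
from collections import Counter


def frequent_tokens(user_messages: list[str], min_share: float = 0.8) -> list[str]:
    """Return words that appear in at least `min_share` of the given User Messages."""
    counts = Counter()
    for message in user_messages:
        # Lowercase, strip surrounding punctuation, and count each word once per message.
        words = {w.strip(string.punctuation) for w in message.lower().split()}
        counts.update(w for w in words if w)
    threshold = min_share * len(user_messages)
    return sorted(word for word, n in counts.items() if n >= threshold)


messages = ["What is SIP", "Tell me about SIP", "How can I know about SIP?"]
print(frequent_tokens(messages))                 # ['sip']
print(frequent_tokens(messages, min_share=0.5))  # ['about', 'sip']
```

Lowering `min_share` surfaces more candidates, including common filler words such as "about", so the list still needs a human pass before you turn it into test cases.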

For example, if a User Message is "Benefits of SIP", test your bot on:

- Benefits of bvdfbv fjdb (Phrase + Gibberish/noise)

- cricket benefits (Gibberish/noise + Phrase)

- benefits (Phrase)
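Writing these probes by hand gets tedious for large test sets. The sketch below builds the three variants for a phrase, using random lowercase strings as gibberish; `noise_probes` and its parameters are hypothetical. Real users' noise is often made of real but unrelated words (like "cricket" above), so treat the generated strings as a starting point rather than a complete set.

```python
import random
import string


def noise_probes(phrase: str, rng: random.Random, noise_words: int = 2) -> list[str]:
    """Build phrase + noise, noise + phrase, and phrase-only probes for one phrase."""

    def gibberish() -> str:
        # Random lowercase "words" of 3 to 6 letters, joined by spaces.
        return " ".join(
            "".join(rng.choice(string.ascii_lowercase) for _ in range(rng.randint(3, 6)))
            for _ in range(noise_words)
        )

    return [f"{phrase} {gibberish()}", f"{gibberish()} {phrase}", phrase]


rng = random.Random(42)  # fixed seed so the probes are reproducible
for probe in noise_probes("benefits", rng):
    print(probe)
```

Each generated probe belongs in the test set with no expected step (or with whatever step you decide the bot should trigger for a bare keyword).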

How to examine the Test Set?

1. Using Test and Debug

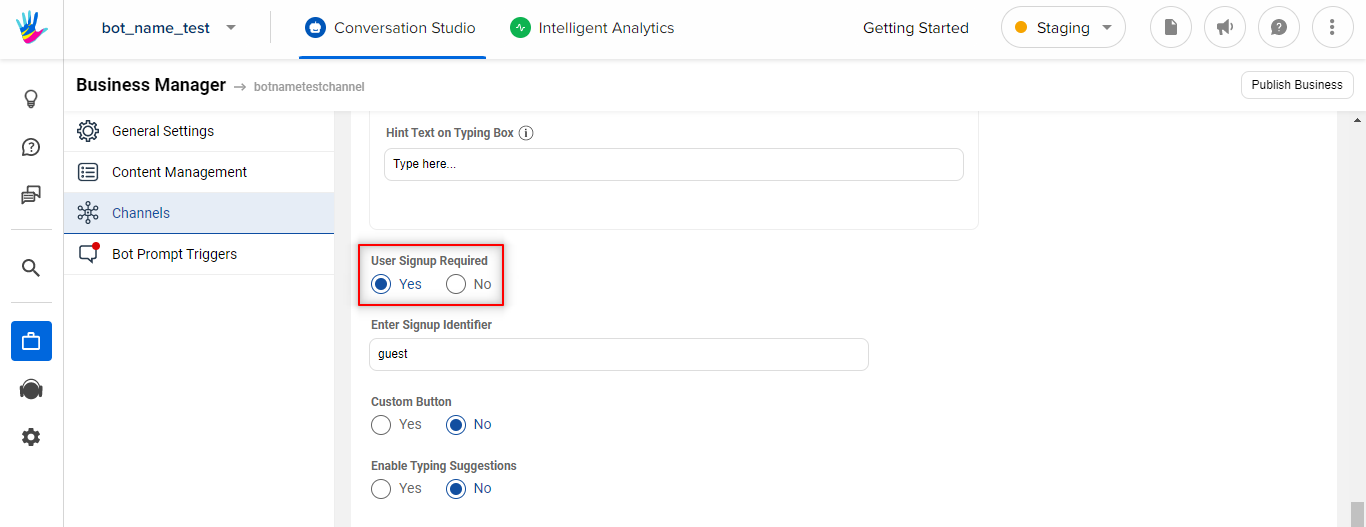

Test your bot as you build it to ensure your end-users won't experience any annoying bot breakages! You can test your bot in the following ways:

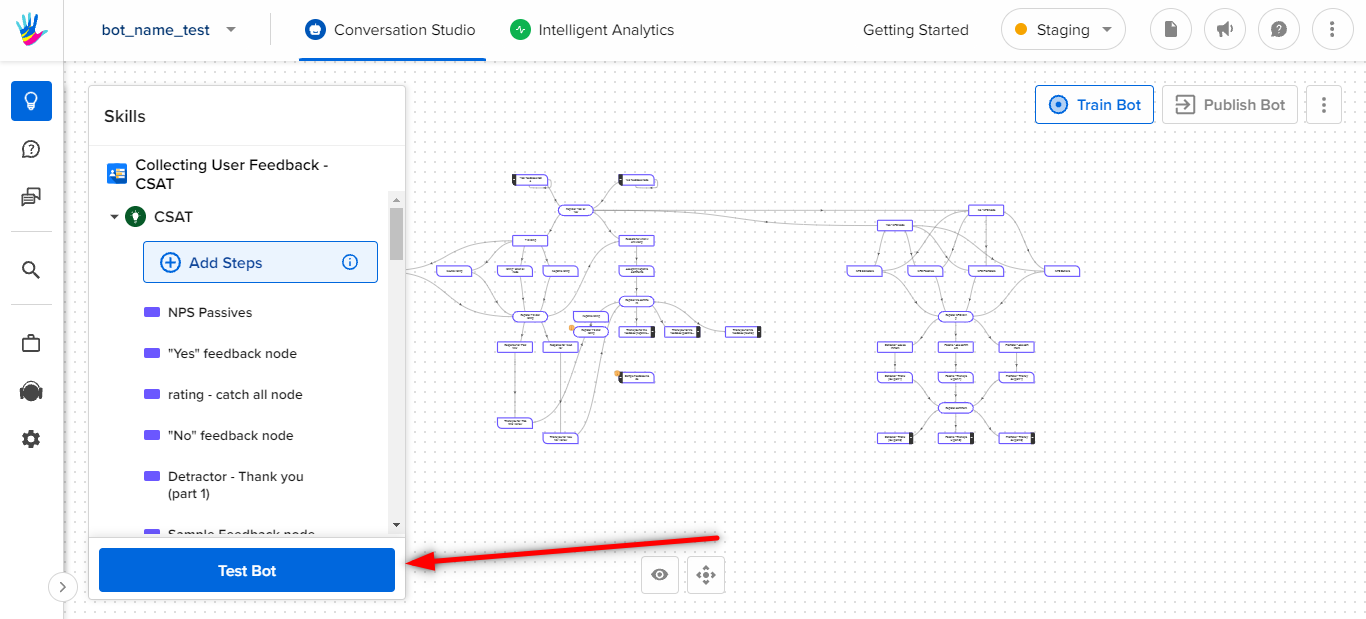

The easiest way to test your bot is to test it directly in the Conversation Studio tool. You can do this by clicking on the "Test Bot" button at the bottom of the left-side menu where you add sub-stories and s.

Another way to test your bot is to select the Share Bot option within the Conversation Studio tool. A pop-up appears with a Test Link that you can use to test the bot.

The third way to test your bot is to deploy and connect the bot to any of the Client SDKs - iOS, Android, Web, Facebook.

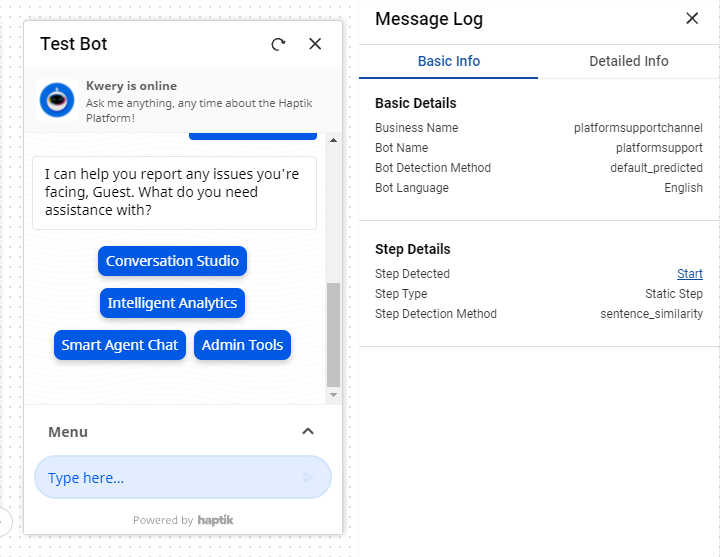

Debug the bot

In the Conversation Studio tool, a Log icon appears next to each message you send to the bot. Clicking this icon shows how the bot processed that particular message: which steps were detected, which entities were collected and their values, and so on.

This information is useful to understand why a bot is behaving in a certain way and how to fix the bot to get the desired results. You can read more about it here.

Training data is tested during the development phase of the lifecycle. This checks whether the bot understands the user's query and takes the user through the right conversation flow.

2. Training Suggestions

Training Suggestions helps you provide quality training data to the bot. The User Messages section of every Start step is crucial for the bot to understand the user's query. The Training Suggestions module runs through all the User Messages and gives the bot builder feedback on how to improve the quality of the training data.
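The exact checks that Training Suggestions performs are not described in this guide. As an illustration of the kind of problem such feedback targets, the sketch below flags one simple issue: the same sentence appearing in the User Messages of two different Start steps, which gives the model contradictory examples. It is a sketch under that assumption, not the Training Suggestions algorithm, and the step names are hypothetical.

```python
from collections import defaultdict


def conflicting_messages(steps: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return User Messages (normalized) that appear under more than one Start step."""
    owners = defaultdict(set)
    for step, messages in steps.items():
        for message in messages:
            # Lowercase and collapse whitespace so near-identical copies match.
            owners[" ".join(message.lower().split())].add(step)
    return {msg: sorted(names) for msg, names in owners.items() if len(names) > 1}


steps = {
    "sip_info": ["What is SIP", "Tell me about SIP"],
    "sip_benefits": ["Benefits of SIP", "tell me about sip"],
}
print(conflicting_messages(steps))  # {'tell me about sip': ['sip_benefits', 'sip_info']}
```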

To know more about the Training Suggestions, click here.

Functional testing

All conversation flows should go through a testing phase to ensure they function properly. If the APIs behind a flow start failing in production, the bot gives bot break responses.
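Before going live, it can help to confirm that every API your flows call actually responds. The sketch below checks a list of endpoints with Python's standard `urllib.request`; the URLs are placeholders, and the hypothetical `fetch` parameter lets you swap in a fake for offline runs. It only looks at HTTP status codes, so it complements the full checklist rather than replacing it.

```python
import urllib.request
from typing import Callable


def default_fetch(url: str) -> int:
    """GET the URL and return its HTTP status (urlopen raises HTTPError for 4xx/5xx)."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return response.status


def check_endpoints(urls: list[str], fetch: Callable[[str], int] = default_fetch) -> dict[str, str]:
    """Return a short health report per URL: 'ok', 'HTTP <code>', or 'error: ...'."""
    report = {}
    for url in urls:
        try:
            status = fetch(url)
            report[url] = "ok" if 200 <= status < 300 else f"HTTP {status}"
        except Exception as exc:  # timeouts, DNS failures, HTTPError, ...
            report[url] = f"error: {exc}"
    return report


# Offline demo with a fake fetch; in real use, omit `fetch` to call the network.
fake_status = {"https://api.example.com/orders": 200, "https://api.example.com/payments": 503}
print(check_endpoints(list(fake_status), fetch=fake_status.__getitem__))
# {'https://api.example.com/orders': 'ok', 'https://api.example.com/payments': 'HTTP 503'}
```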

We have prepared a quick guide containing all the checkpoints that need to be assessed before taking the bot to production. To read the guide, click here.

Regression testing

Haptik's Bot QA Tool makes regression testing of a bot scalable and thus saves time. The tool runs through the bot's entire conversation journey.
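The Bot QA Tool's internals are not covered in this guide, but the core idea of a regression check can be sketched: record which step each test sentence triggered on a known-good version of the bot, rerun the same sentences after a change, and flag every sentence whose result changed. The sketch below assumes both runs are available as dictionaries mapping sentence to detected step (`None` for no step); `find_regressions` is a hypothetical helper.

```python
from typing import Optional

# Detected step per test sentence; None means no step was triggered.
Run = dict[str, Optional[str]]


def find_regressions(baseline: Run, current: Run) -> list[tuple[str, Optional[str], Optional[str]]]:
    """Return (sentence, baseline_step, current_step) for every sentence whose result changed."""
    return [
        (sentence, before, current.get(sentence))
        for sentence, before in sorted(baseline.items())
        # A sentence missing from the current run also counts as a regression.
        if sentence not in current or current[sentence] != before
    ]


baseline = {"What is SIP": "sip_info", "Benefits of SIP": "sip_benefits", "Hi": "greeting"}
current = {"What is SIP": "sip_info", "Benefits of SIP": "sip_info", "Hi": "greeting"}
print(find_regressions(baseline, current))  # [('Benefits of SIP', 'sip_benefits', 'sip_info')]
```

Comparing against a previous run rather than hand-written expectations makes it cheap to rerun the whole suite after every change, which is what makes regression testing scale.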

To learn more about using the Bot QA Tool, read here.